After space travel, robots are one of the most beloved themes of science fiction. People have imagined creating artificial people for centuries. Writers of robot stories have seldom explored the technical details of what it means to create a thinking being; they just assumed it would be done someday in the future. Artificial intelligence has been a real academic pursuit since the 1950s, and even though scientists have produced machines that can play chess and Jeopardy, many people doubt we will ever build a machine that knows it's playing chess or Jeopardy.

I disagree, although I have no proof or authority to say so. Let's just say that if I were to bet money on which will come first, a self-aware thinking machine or a successful manned mission to Mars, I'd put my money on the thinking machines. I'm hoping for both sometime before I die, and I'm 61.

There is a certain amount of basic logic involved in predicting intelligent machines. If the human mind evolved through random events in nature, and intelligence emerged as a byproduct of ever-growing biological complexity, then it's easy to suggest that machine intelligence could evolve out of ever-growing computer complexity.

However, there's talk on the net about the limits of high performance computing (HPC) and the barriers to scaling it up; see "Power-mad HPC fans told: No exascale for you – for at least 8 years" by Dan Olds at The Register. The world's fastest computer today needs 8 megawatts to crank out 18 petaflops, but scaling it up to an exaflop machine would require roughly 444 megawatts of power, or a $450 million annual power bill. And if current supercomputers aren't as smart as a human and cost millions to run, how likely is it that we'll ever have AI machines or android robots that can think like a man? It makes it damn hard to believe in the Singularity. But I do. I believe intelligent machines are one science fictional dream within our grasp.
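A quick way to sanity-check power figures like these is to scale linearly: divide the power draw by the performance, then multiply by 1,000 petaflops (one exaflop). The Python sketch below does that with the rounded 8 MW and 18 petaflops quoted above. The linear-scaling model, round-the-clock operation, and the $0.11 per kWh electricity price are illustrative assumptions, not numbers taken from the Register article.

```python
# Back-of-the-envelope exascale power estimate.
# Assumptions (illustrative, not from the article): power scales linearly
# with performance, the machine runs 24/7, and electricity costs $0.11/kWh.

HOURS_PER_YEAR = 24 * 365


def exascale_estimate(power_mw: float, petaflops: float,
                      target_petaflops: float = 1000.0,
                      usd_per_kwh: float = 0.11) -> tuple[float, float]:
    """Return (required megawatts, annual power bill in US dollars)."""
    mw_per_petaflop = power_mw / petaflops            # efficiency of today's machine
    required_mw = mw_per_petaflop * target_petaflops  # 1 exaflop = 1000 petaflops
    annual_kwh = required_mw * 1000 * HOURS_PER_YEAR  # 1 MW = 1000 kW
    return required_mw, annual_kwh * usd_per_kwh


if __name__ == "__main__":
    mw, bill = exascale_estimate(power_mw=8.0, petaflops=18.0)
    print(f"{mw:.0f} MW, ${bill / 1e6:.0f} million per year")
    # prints: 444 MW, $428 million per year
```

Under these assumptions the estimate lands near the $450 million annual bill quoted above. Real machines would not scale this simply, so treat it only as a rough check on the order of magnitude.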


Titan is the current speed demon of supercomputers, and it occupies 4,352 square feet. Even if all its power could be squeezed into a box the size of our heads, it wouldn't be considered intelligent, not in the way we define human intelligence. No human could calculate what Titan does, but it's still considered dumb by human standards of awareness. However, I think it's a mistake to assume the road to artificial awareness lies down the supercomputer path. Supercomputers can't even do what a cockroach does cognitively. They weren't meant to, either.

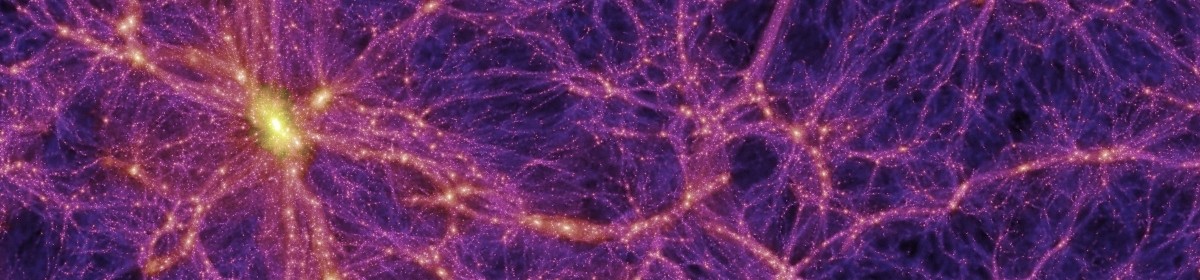

It's obvious that our brains aren't digital computers. Our brains process patterns and are composed of many subsystems whose whole is greater than the sum of its parts. Self-aware consciousness seems to be a byproduct of evolutionary development. The universe has always been an interaction between its countless parts. At first it was just subatomic particles. Over time the elements were created. Then molecules, which led to chemistry. Along the way biology developed. As living forms progressed through the unfolding of evolutionary permutations, various sensory organs developed to explore the surrounding reality. Slowly the awareness of self emerged.

There are folks who believe artificial minds can't be created because minds are souls, and souls come from outside of physical reality. I don't believe this. One piece of evidence I can offer is that we can alter minds by altering their physical bodies.

To create artificial beings with self-awareness we’ll need to create robots with senses and pattern recognition systems. My guess is this will take far less computing power than people currently imagine. I think the human brain is based on simple tricks we’ve yet to discover. It’s three pounds of gray goo, not magic.

Human brains don't process information anywhere near as fast as computers. We shouldn't need exascale supercomputers to recreate human brains in silicon. We need a machine that can see, hear, touch, smell, and taste, and can learn a language. Smell, touch and taste might not be essential. One thing I seldom see discussed is learning. It takes years for a human to develop into a thinking being: years of processing patterns into words and memories. If we didn't have language and memory, would we even be self-aware? If it takes us five years to learn to think like a five-year-old, how long will it take a machine?

And if scientists spend years raising an artificial mind that thinks and is conscious, can we turn it off? Will that be murder? And if we turn it off and then back on, will it be the same conscious being as before? How much of our self-awareness is memory? Can we be a personality if we only have awareness of the moment? Won't self-awareness need a kind of memory that's different from hard-drive-style memory?

I believe intelligent, self-aware machines could emerge in our lifetimes, if we all live long enough. I doubt we’ll see them by 2025, but maybe by 2050. Science fiction has long imagined first contact with an intelligent species from outer space, but what if we make first contact with beings we created here on Earth? How will that impact society?

There have been thousands of science fiction stories about artificial minds, but I'm not sure many of them are realistic. The ones I like best are When HARLIE Was One by David Gerrold, Galatea 2.2 by Richard Powers, and the Wake, Watch, Wonder trilogy by Robert J. Sawyer.

These books imagine the waking of artificial minds, and their growth and development. Back in the 1940s Isaac Asimov suggested the positronic brain. He assumed we'd program the mechanical brain. I believe we'll develop a cybernetic brain that can learn and, through interacting with reality, will develop a mind and eventually become self-aware. What we need is a cybercortex to match our neocortex. We won't need an equivalent of the amygdala, because without biology our machine won't need those kinds of emotions (fear, lust, anger, etc.). I do imagine our machine will develop intellectual emotions (curiosity, ambition, serenity, etc.). An interesting philosophical question: Can there be love without sex? Maybe there are a hundred types of love, some of which artificial minds might explore. And I assume the new cyber brains might feel things we never will.

In the 19th century there were people who imagined heavier-than-air flight long before it happened. Now, I'm not talking about prophecy. Most people before October 4, 1957 would not have believed that man would land on the Moon by 1969. I suppose we can pat science fiction on the back for preparing people for the future and inspiring inventors, but I don't know if that's fair. Rockets and robots would have been invented without science fiction, but science fiction lets the masses play with emerging concepts, preparing them for social change.

My guess is a cybercortex will be invented accidentally sometime soon, leading to intelligent robots that will impact society the way the iPhone did. These machines, with the ability to learn generalized behavior, might not be self-aware at first, but they will be smart enough to do real work: work humans like to do now. And we'll let them. For some reason, we never say no to progress.

I'm not really concerned about cybernetic doctors and lawyers. I'm curious what beings with minds 2, 5, 10 or 100 times smarter than us will do with their great intelligence. I do not fear AI minds wiping us out. I'm more worried that they might say, "Want me to fix that global warming problem you have?" Or, "Do you want me to tell you the equations for the grand unified theory?"

How will we feel if we’re not the smartest dog around?

JWH – 5/19/13

Great post, Jim. I personally think that machine intelligence is our next stage of evolution. If/when the level of machine intelligence you discuss above comes about, I'm hopeful that it can be used to make humanity smarter through machine/biology interfaces…we need it. Biology is messy, though, so it may never come about. Interesting subject either way, and I'm excited about watching advancements in this area in coming years.

I also think machine minds are our descendants. I think they will also be the true space explorers. I do not think humans are the crown of creation. We're just one step along the path. I believe humans will inherit the Earth, and probably Mars, but machines are better suited to live in the rest of the solar system.

Anyone who thinks cats aren’t self-aware hasn’t spent enough time with one. Dolphins can recognize themselves in a mirror. Chimps use tools and parrots have demonstrated language skills. Humans aren’t as special as some people think.

I agree. I think consciousness is a spectrum and humans are a continuation of what's come before in the animal kingdom. I think we're different because we have a language to think about ourselves. I think some animals have a proto-language, and even language skills are part of a continuum.

Well written and thought-provoking. However, I wonder whether an artificial intelligence developed without the senses of smell, touch and taste will ever become truly sentient. I often think that the sensory input from the so-called minor senses is the tapestry upon which the brain paints the narrative we interpret as reality. Which, as the article points out, would leave an artificial entity with a different view of nature, and its place in it.

Actually, we have very powerful artificial taste, touch and smell devices now that could be incorporated into an artificial being. We could build a machine that could detect smells better than any animal, taste chemicals in parts per billion, or see far beyond the spectrum visible to humans.

I have no idea when it comes to self-aware machines, though I don’t see any reason why it couldn’t happen, in principle. I don’t know why we’d want to make machines like human beings, though. We’ve already got human beings, so wouldn’t it be like reinventing the wheel? What would be the point?

Computers can do things we can’t. That’s the big advantage they give us. Robots would certainly be useful, but, again, it would be because they could do things we can’t.

OK, they could also do things which are too dangerous or too disagreeable for human beings. But if that were the case, we still wouldn’t want imitation humans, because they’d be people, then. And that would rule them out for jobs like that.

For other things, I suspect that we might just link human brains to machines, to augment our abilities. There’s no reason to invent human brains when we already have them. Other jobs don’t require a self-aware brain, so it would be a waste to create self-aware robots for them. And it would be unethical to create self-aware machines just for our own amusement.

I don’t know. It’s fun to speculate about such things. But I’m not even going to pretend to forecast the future, because there’s no way we’ll get it right. It’s fun to look at the possibilities, though.

Very nice post. While it's true that the architecture and functioning of living brains are very different from those of digital computers, it might be possible to simulate the brain and its functions in a computer (artificial neural networks, etc.). If that proves successful, then a mind of some sort might emerge from that simulation. The Human Brain Project is a modern attempt at such a simulation using supercomputers: http://www.humanbrainproject.eu/index.html

MSS

You gotta have The Two Faces of Tomorrow by James P. Hogan

http://www.baenebooks.com/chapters/0671878484/0671878484.htm

Hi all, has anyone read "The Third Kind"? I think that was the title.